Why Traditional UIs Break Down When Agents Take Over

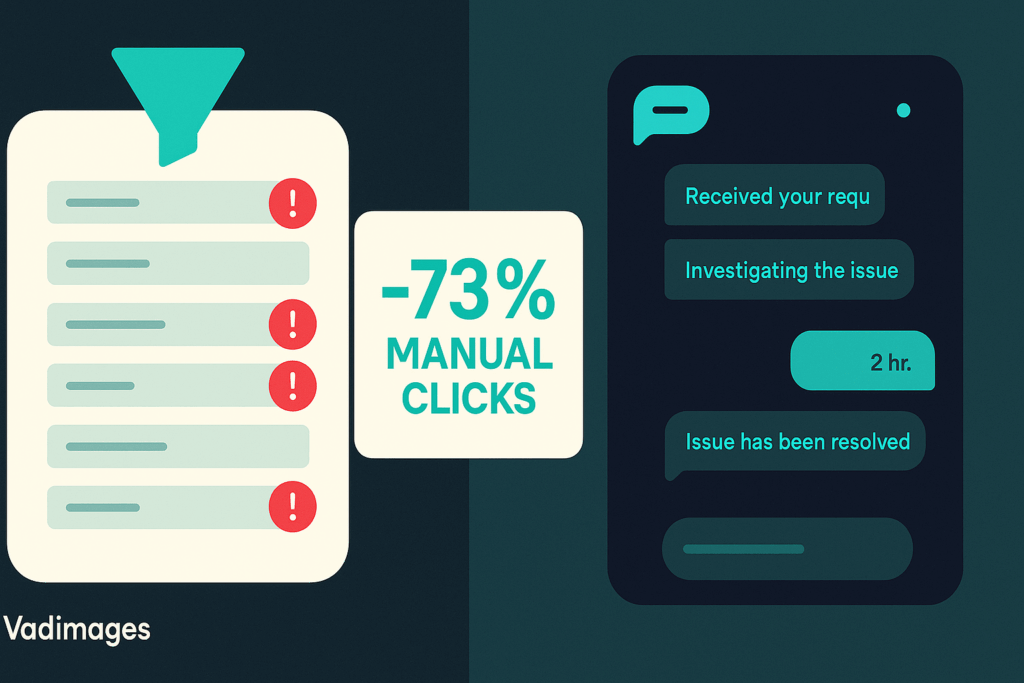

American small and mid-sized businesses spent most of the 2010s perfecting dashboards and drag-and-drop workflows, only to discover in 2025 that those patterns buckle when autonomous agents drive the work. A lead-capture sequence that once asked marketers to fill eight fields now needs an interface that lets a GPT-4-class agent curate, validate, and route that lead without human clicks. When you force an agent through screens designed for people, latency spikes, hand-offs fail, and the very efficiency you hired the AI for evaporates. Warmly’s recent survey of SMB owners confirms the frustration: forty-eight percent cite “UI mismatch” as the top reason pilot projects stall.

Specialized agent interfaces untangle that choke point by translating high-volume intent into the structured, low-ambiguity payloads that LLM-driven workers crave. They surface “explanation panes” instead of tooltips, log decision traces in plain English, and queue real-time interventions only when the agent’s confidence drops below a threshold you set. The result is a user experience that feels less like babysitting a black box and more like conversing with a trusted lieutenant.
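The escalation rule can be sketched as a small confidence gate in Python. This is an illustration under stated assumptions, not Vadimages’ code. `AgentDecision`, `triage`, and the 0.8 threshold are hypothetical, and the sketch assumes the underlying model reports a confidence score between 0 and 1. Each decision carries a plain-English trace, which is the kind of text an explanation pane could display.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentDecision:
    """A proposed action plus the plain-English trace shown in an explanation pane."""
    action: str
    confidence: float  # assumed: a model-reported score between 0 and 1
    trace: List[str] = field(default_factory=list)


def triage(decision: AgentDecision, threshold: float,
           review_queue: List[AgentDecision]) -> str:
    """Run confident decisions; queue low-confidence ones for a human (hypothetical helper)."""
    if decision.confidence < threshold:
        decision.trace.append(
            f"Confidence {decision.confidence:.2f} is below {threshold:.2f}; "
            "queued for human review."
        )
        review_queue.append(decision)
        return "queued"
    decision.trace.append(
        f"Confidence {decision.confidence:.2f} meets {threshold:.2f}; executed automatically."
    )
    return "executed"


# Usage with made-up lead-routing decisions.
queue: List[AgentDecision] = []
berlin = AgentDecision("route lead to EMEA sales", 0.93, ["Company HQ is in Berlin."])
unknown = AgentDecision("route lead to enterprise team", 0.41, ["Employee count is missing."])
print(triage(berlin, 0.8, queue))   # executed
print(triage(unknown, 0.8, queue))  # queued
print(unknown.trace[-1])  # Confidence 0.41 is below 0.80; queued for human review.
```

Keeping the threshold as a parameter mirrors the “threshold you set” idea. Raising it from 0.8 to 0.9 sends more work to people without changing the agent itself.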

A Pragmatic Blueprint for Agentic UX

Designing for agents starts with four pillars highlighted in the latest Agentic UX framework: perception, reasoning, memory, and agency.

Perception layers collect data in structured blocks (think JSON snippets behind every card), so your agent never scrambles to parse raw HTML. Reasoning layers expose model prompts and intermediate reasoning steps for auditability, turning opaque “thoughts” into readable narratives that non-technical staff can trust. Memory layers attach persistent context to each session, so tasks carry over across days instead of resetting with every call. Finally, agency layers gate irreversible actions behind configurable policies, much like role-based access control in human apps, so your finance bot never wires funds twice.
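The agency layer is the easiest pillar to sketch in code. The Python below is a hypothetical example, not any specific product’s API. `AgencyGate`, its `approval_above` policy, and the payload fields are invented for illustration. The gate holds large irreversible actions until a named approver signs off, and it refuses to run the same structured payload twice. It assumes every payload carries a unique business identifier such as an invoice number, so identical fingerprints really do mean a repeated request. Some payment APIs, Stripe among them, accept an explicit idempotency key for the same purpose.

```python
import hashlib
import json
from typing import Optional, Set


class AgencyGate:
    """Hypothetical agency layer: policy checks plus duplicate protection."""

    def __init__(self, irreversible_types: Set[str], approval_above: float):
        self.irreversible_types = irreversible_types
        self.approval_above = approval_above  # amounts above this need a named approver
        # Fingerprints of actions already run. In production this set would live
        # in durable storage, not process memory.
        self._executed: Set[str] = set()

    @staticmethod
    def fingerprint(action: dict) -> str:
        # Canonical JSON (sorted keys, fixed separators) so an identical structured
        # payload always produces the same hash, regardless of key order.
        canonical = json.dumps(action, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def submit(self, action: dict, approved_by: Optional[str] = None) -> str:
        key = self.fingerprint(action)
        if key in self._executed:
            return "rejected: duplicate"
        needs_approval = (
            action["type"] in self.irreversible_types
            and action.get("amount", 0) > self.approval_above
        )
        if needs_approval and approved_by is None:
            return "held: needs approval"  # not recorded, so it can run once approved
        self._executed.add(key)
        return "executed"


gate = AgencyGate({"wire_transfer"}, approval_above=1000.0)
wire = {"type": "wire_transfer", "invoice_id": "INV-1042",
        "payee": "Acme Supply", "amount": 2500.0}
print(gate.submit(wire))                            # held: needs approval
print(gate.submit(wire, approved_by="controller"))  # executed
print(gate.submit(wire, approved_by="controller"))  # rejected: duplicate
```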

Vadimages bakes this blueprint into a React-based starter kit that ships with reusable pattern libraries: confirm-or-correct modals, vector-search-backed memory drawers, and streaming token visualizers that show the agent “thinking” in milliseconds. Ringg AI’s voice-first expansion underscores why that matters: customers adopt the platform precisely because launching a voice agent “is as effortless as sending a WhatsApp message.” The easier you make deployment, the faster a mid-market brand turns autonomous ambition into operational lift.
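A memory drawer backed by vector search boils down to storing embeddings and ranking them by similarity at recall time. The sketch below is a hypothetical, brute-force version in plain Python, not the starter kit’s implementation. `MemoryDrawer` is an invented name, and the three-dimensional vectors stand in for the output of a real embedding model. Once memory grows, a vector database replaces the linear scan with an approximate nearest-neighbor index.

```python
import math
from typing import List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class MemoryDrawer:
    """Hypothetical in-memory store: brute-force vector search over past notes."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float]]] = []

    def remember(self, note: str, embedding: List[float]) -> None:
        self._items.append((note, embedding))

    def recall(self, query: List[float], k: int = 2) -> List[str]:
        # Rank every stored note by similarity to the query, highest first.
        ranked = sorted(self._items,
                        key=lambda item: cosine_similarity(query, item[1]),
                        reverse=True)
        return [note for note, _ in ranked[:k]]


# Toy 3-dimensional "embeddings"; a real system would get these from an embedding model.
drawer = MemoryDrawer()
drawer.remember("Customer prefers email over phone", [0.9, 0.1, 0.0])
drawer.remember("Refund approved last week", [0.1, 0.9, 0.1])
drawer.remember("Orders ship from the Austin warehouse", [0.0, 0.2, 0.9])
print(drawer.recall([0.8, 0.2, 0.0], k=1))  # ['Customer prefers email over phone']
```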

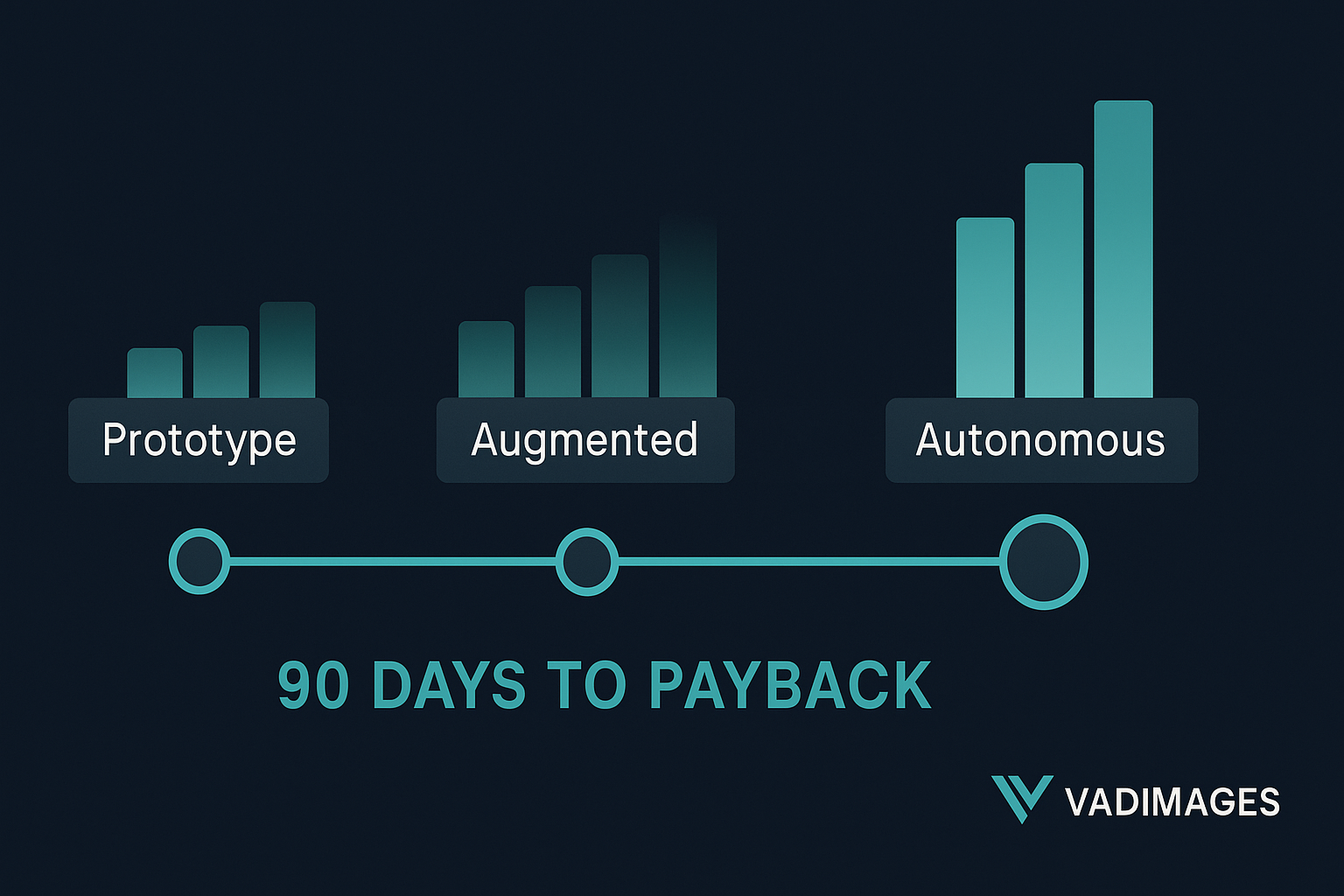

From Pilot to Production: Your 90-Day Interface Roadmap

Day 0–30: Prototype a narrow, high-value workflow (customer refund approvals, inventory re-orders, or quote generation) inside the starter kit. Map every human decision to a structured intent and let the agent run in “shadow mode,” logging recommendations while staff retain veto power.
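Shadow mode mostly comes down to disciplined logging. The Python sketch below is hypothetical (`ShadowLog` and the refund case IDs are invented). It records what the agent would have done next to what staff actually decided, returns only the human decision, and reports how often the two agree. Using that agreement rate as a promotion signal for the next phase is an assumption, not a rule from the roadmap.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ShadowRecord:
    case_id: str
    agent_recommendation: str  # logged only; never executed in shadow mode
    human_decision: str        # the decision that actually takes effect


class ShadowLog:
    """Hypothetical shadow-mode log: the agent recommends, staff decide."""

    def __init__(self) -> None:
        self.records: List[ShadowRecord] = []

    def record(self, case_id: str, agent_recommendation: str, human_decision: str) -> str:
        self.records.append(ShadowRecord(case_id, agent_recommendation, human_decision))
        return human_decision  # staff keep veto power: only their call is returned

    def agreement_rate(self) -> float:
        if not self.records:
            return 0.0
        matches = sum(r.agent_recommendation == r.human_decision for r in self.records)
        return matches / len(self.records)

    def disagreements(self) -> List[str]:
        return [r.case_id for r in self.records
                if r.agent_recommendation != r.human_decision]


log = ShadowLog()
log.record("R-101", "approve", "approve")
log.record("R-102", "approve", "deny")
log.record("R-103", "deny", "deny")
log.record("R-104", "approve", "approve")
print(log.agreement_rate())  # 0.75
print(log.disagreements())   # ['R-102']
```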

Day 31–60: Promote the agent to “augmented” status. It acts automatically on transactions under $500 or in other low-risk scenarios, while routing edge cases to supervisors via Slack and email digests. At this stage, our Vadimages telemetry panel watches for drift in model confidence, surfaces daily precision and recall metrics, and reminds you when to retrain embeddings. Medium’s deep dive on agentic AI warns teams not to skip this statistical hygiene: context shifts faster than you expect.
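The routing rule and the telemetry checks in this phase can be sketched in plain Python. Everything here is hypothetical rather than the Vadimages telemetry panel’s API: the `low`/`medium`/`high` risk labels, the assumption that a `high` label always escalates, and the drift check, which simply compares mean confidence against a baseline as a crude stand-in for a proper statistical drift test. Precision and recall are computed the standard way, from the agent’s positive calls and the outcomes supervisors later confirmed.

```python
from statistics import mean
from typing import List, Tuple


def route(amount: float, risk_label: str, auto_limit: float = 500.0) -> str:
    """Decide whether the agent acts alone or escalates (hypothetical rule)."""
    if risk_label == "high":
        return "supervisor"  # assumed override: high-risk cases always escalate
    if amount < auto_limit or risk_label == "low":
        return "auto"
    return "supervisor"      # e.g. delivered as a Slack message or email digest


def precision_recall(predicted: List[bool], actual: List[bool]) -> Tuple[float, float]:
    """Precision and recall of the agent's positive calls against reviewed outcomes."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    fp = sum(1 for p, a in pairs if p and not a)
    fn = sum(1 for p, a in pairs if a and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def confidence_drift(baseline: List[float], today: List[float],
                     tolerance: float = 0.05) -> bool:
    """Flag drift when mean confidence falls more than `tolerance` below baseline."""
    return mean(baseline) - mean(today) > tolerance


print(route(120.0, "medium"))   # auto
print(route(2000.0, "medium"))  # supervisor
print(precision_recall([True, True, False, False], [True, True, True, False]))
print(confidence_drift([0.90, 0.88, 0.92], [0.80, 0.78, 0.82]))  # True
```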

Day 61–90: Flip the switch to “fully autonomous” for the scoped workflow, then rinse and repeat on adjacent tasks. Businesses that follow this cadence report a 38 percent decrease in customer wait time and a 24 percent bump in first-contact resolution, according to Aalpha’s May 2025 benchmark.

Why Vadimages Is the Partner Who Makes It Stick

Agentic UX is not just another Figma exercise. It demands deep familiarity with streaming APIs, vector databases, and safety scaffolds—skills most in-house teams juggle only on weekends. Vadimages has spent eighteen years translating bleeding-edge tech into revenue-ready products, and our U.S. clients love that we price engagements like builders, not bodies. Every project includes a dedicated AI safety lead, nightly regression tests in a staged sandbox, and a performance SLA measured in milliseconds, not marketing fluff.

Ready to see what autonomy feels like when the interface helps instead of hinders? Book a discovery call at vadimages.com/contact and we’ll send a live demo that reroutes your busiest support queue to an agentic console before your next coffee refill.