Google’s AI Overviews compress the web into tidy summaries. That does not mean SMBs should accept fewer clicks. It means your pages must be engineered to be the calm, canonical source the summary leans on and, crucially, the place a motivated searcher visits when the overview sparks intent. The winners are not those who shout the loudest, but those who structure answers so machines can quote them and humans can act on them. This article is a practical playbook from Vadimages for adapting content, FAQs, and schema so your pages still earn attention, visits, and revenue inside AI-heavy results.

Why AI Overviews Change the Click Game for SMBs

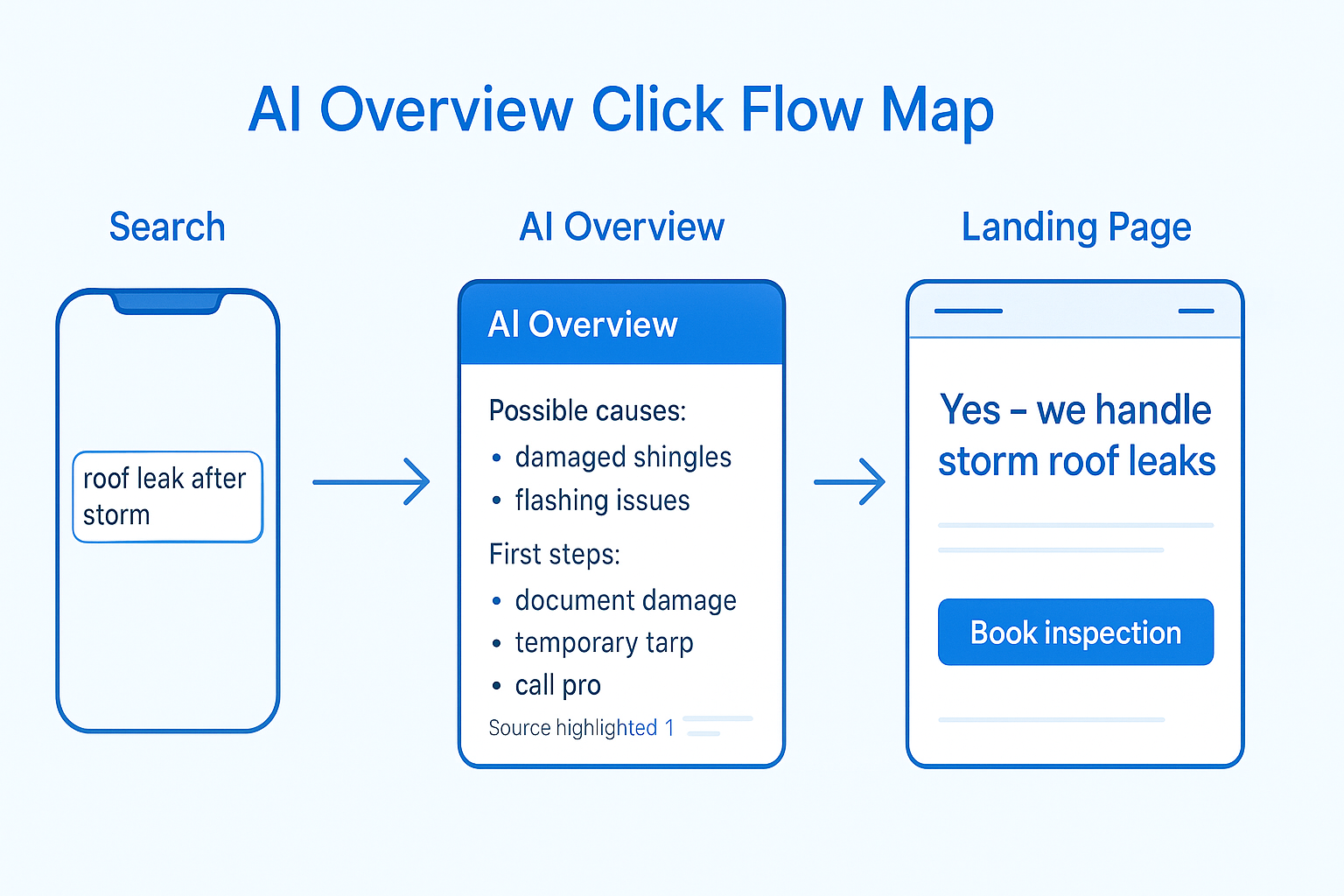

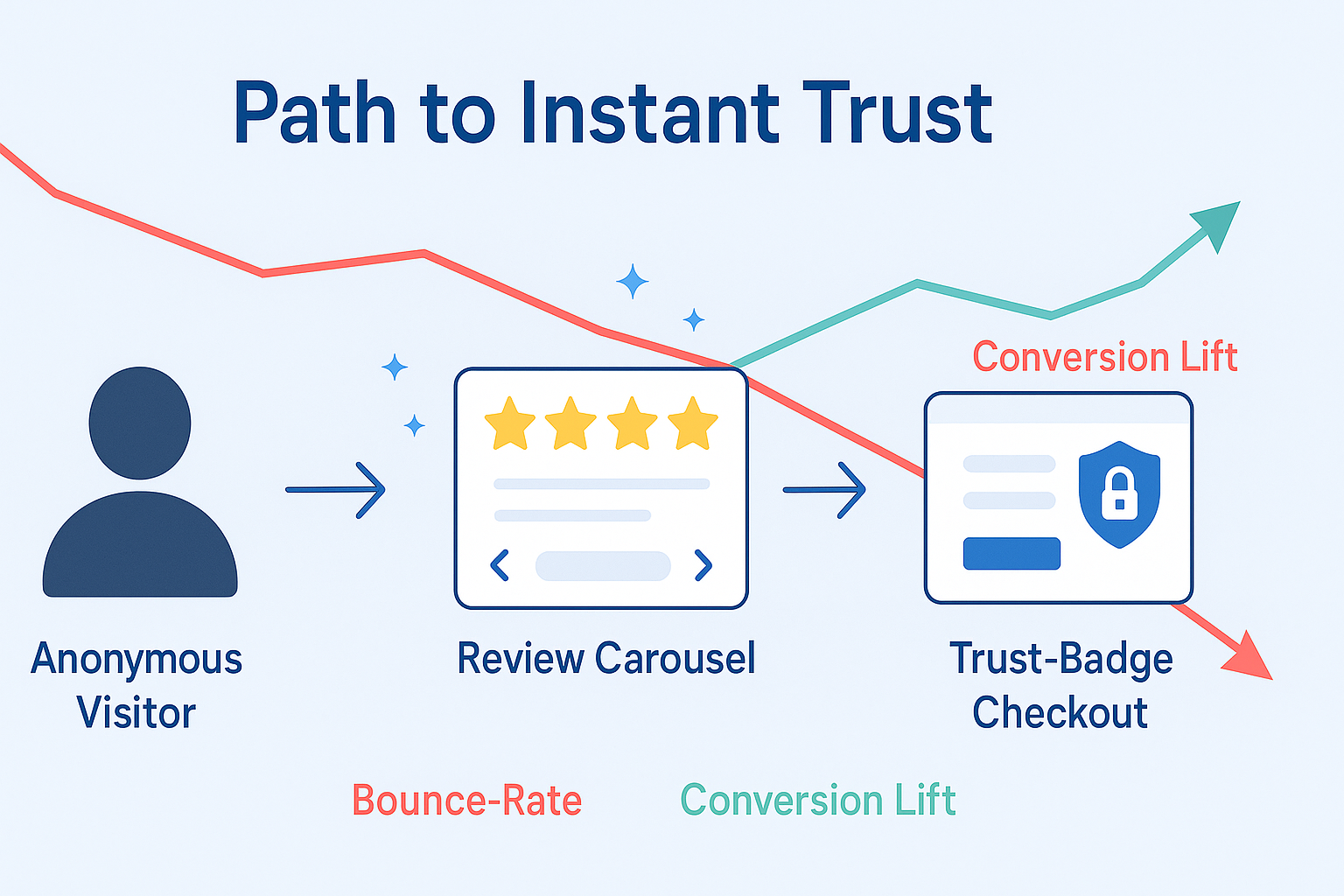

Traditional blue links rewarded broad topical coverage and catchy title tags. AI Overviews reward clarity at the very top of the page, consistent factual scaffolding throughout, and explicit next steps aligned with searcher intent. A homeowner with a flooded basement wants a first move, a price range, and a phone number, and the AI card will try to provide all three. If your page opens with a long brand story or hides contact details below the fold, you are training the model to look elsewhere. If your answer leads with a precise, source-ready statement, followed by a short evidence block and a clean action, you train the model to quote you and you train the human to click.

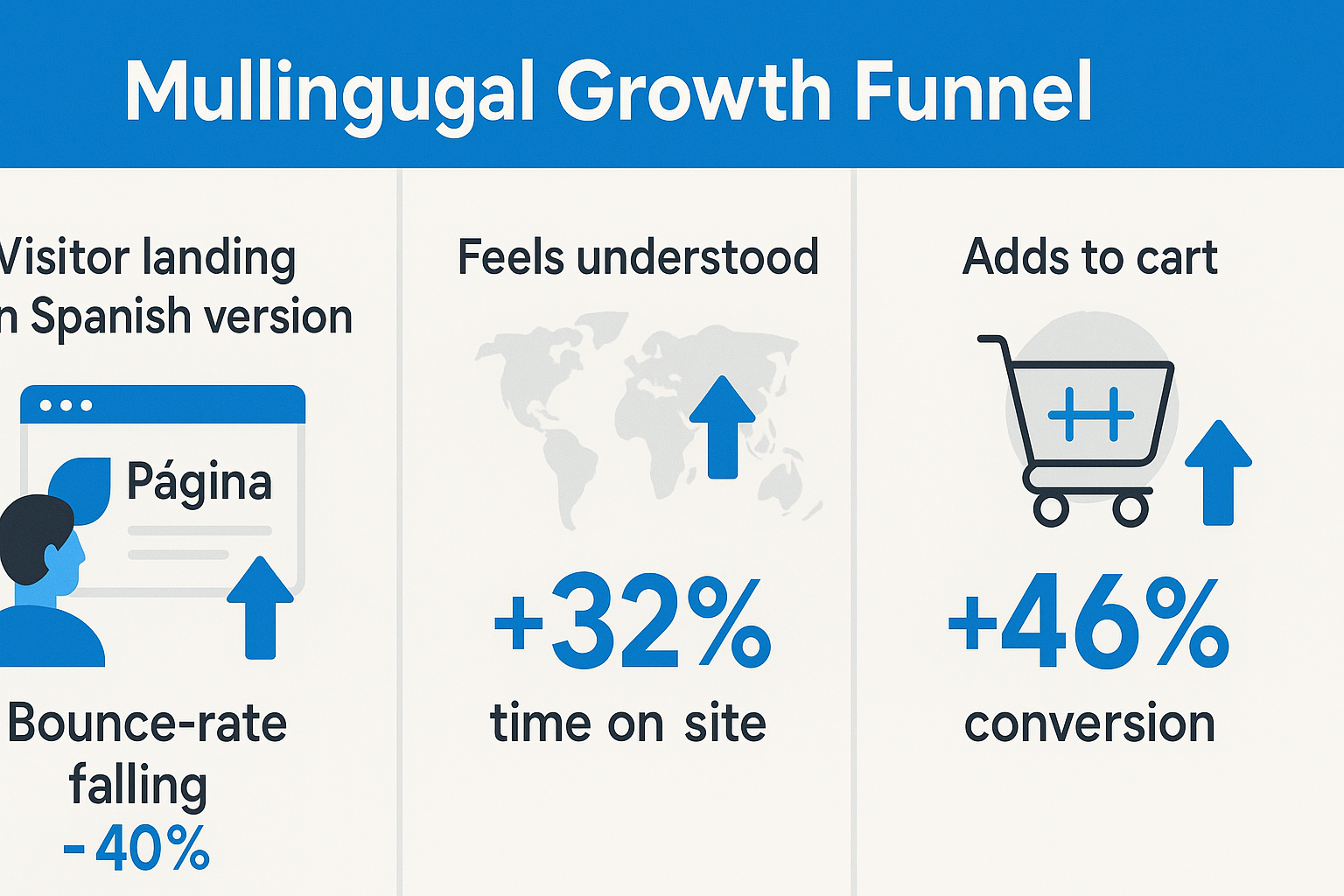

This shift is most visible on commercial and local intent queries where the AI summary attempts to explain a problem and propose a solution pathway. For US SMBs in home services, healthcare, legal, education, and retail repair, the page that wins is the page that reduces uncertainty in the first screen, proves proximity and availability in the second, and offers a zero-friction conversion in the third. The technical layer matters as much as the prose. Consistent NAP (name, address, phone) data, complete LocalBusiness schema, fast mobile performance, and clear policy pages help the model trust you. Clean Q&A markup and sectioned headings help the model find extractable sentences. A thoughtful internal link after each compact answer guides the motivated reader into a deeper conversion journey even when the AI shows much of your summary for free.

A Practical Playbook: Structure, FAQs, and Schema That Survive Summaries

Start with the fold and write for two audiences at once. For the model, supply a crisp one-to-two sentence answer that could be copied into an overview without losing meaning. For the human, follow immediately with a short consequence statement and a concrete next step. A water damage company might open with the time window before mold risk rises, then offer a same-day inspection link. A pediatric clinic might lead with age-specific dosing guidance and then a “call now” block with hours. These openers should be free of hedging, include a relevant noun phrase that matches common query wording, and avoid brand-first language until the first action is displayed.

Treat FAQs as strategic content, not filler. Cluster questions around intents that AI Overviews frequently assemble: definition, diagnosis, prevention, cost, availability, and eligibility. Each answer should be a miniature landing page with a sentence that can stand alone, a supporting clarification that adds nuance the model can cite, and a soft CTA or internal link that moves the reader toward either a booking page or an educational resource. Resist the urge to stack dozens of questions. Fewer, stronger entries reduce duplication and improve extractability.
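One way to keep a small, deduplicated FAQ set in step with its markup is to generate the FAQPage JSON-LD from the same entries that render on the page. The sketch below assumes a hypothetical list of dicts with "question" and "answer" keys; the function name and data shape are illustrative, not part of any library.

```python
import json


def build_faq_jsonld(entries):
    """Build a schema.org FAQPage dict from the visible FAQ entries.

    `entries` is a hypothetical list of dicts with "question" and "answer"
    keys. Questions that repeat (case-insensitive match) are dropped so the
    markup stays as lean as the visible FAQ block.
    """
    seen = set()
    main_entity = []
    for entry in entries:
        question = entry["question"].strip()
        key = question.lower()
        if key in seen:
            continue  # skip duplicate questions
        seen.add(key)
        main_entity.append({
            "@type": "Question",
            "name": question,
            "acceptedAnswer": {"@type": "Answer", "text": entry["answer"].strip()},
        })
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": main_entity,
    }


# Illustrative entries; the third repeats the second and is dropped.
faqs = [
    {"question": "What should I do first if my roof leaks after a storm?",
     "answer": "Move electronics, place a bucket, and call a licensed roofer "
               "within 24 hours to reduce mold risk."},
    {"question": "How much does emergency roof repair cost in Jacksonville, FL?",
     "answer": "Most emergency leak repairs range from $250 to $900 depending "
               "on the damage and roof type."},
    {"question": "how much does emergency roof repair cost in Jacksonville, FL?",
     "answer": "Duplicate entry that should be dropped."},
]

faq_jsonld = build_faq_jsonld(faqs)
print(json.dumps(faq_jsonld, indent=2))
```

Because the markup is derived from the page's own entries, an edit to a visible answer cannot silently drift away from the structured version.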

Use headings like signposts rather than slogans. The model does not need clever copy to interpret your content hierarchy; it needs consistent labels. If you serve Jacksonville, FL, say so in the heading and first paragraph of the section that describes your service radius, and echo it in your schema with areaServed and address components. If you publish a price range, indicate what is included, state assumptions, and add a “request a firm quote” micro-form near the paragraph. The more your prose and schema align, the more likely you will be treated as the stable source that summaries rely on.
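A rough way to test whether prose and schema agree is to check that the key facts in the markup also appear in the visible text. This is a minimal sketch under stated assumptions: the page text has already been extracted to a plain string, only a handful of fields are checked, and the helper names are hypothetical.

```python
import re


def digits_only(value):
    """Strip everything except digits so phone formats can be compared."""
    return re.sub(r"\D", "", value)


def find_schema_mismatches(schema, visible_text):
    """Return schema fields whose values do not appear in the visible text."""
    text = visible_text.lower()
    address = schema.get("address", {})
    checks = {
        "name": schema.get("name"),
        "addressLocality": address.get("addressLocality"),
        "postalCode": address.get("postalCode"),
    }
    missing = [
        field for field, value in checks.items()
        if value and value.lower() not in text
    ]
    # Compare the last 10 digits so "+1-904-555-0199" matches "(904) 555-0199".
    phone = schema.get("telephone")
    if phone and digits_only(phone)[-10:] not in digits_only(visible_text):
        missing.append("telephone")
    return missing


# Illustrative inputs: the visible copy never mentions the ZIP code.
schema = {
    "name": "Atlantic Roof & Repair",
    "telephone": "+1-904-555-0199",
    "address": {"addressLocality": "Jacksonville", "postalCode": "32259"},
}
visible = (
    "Atlantic Roof & Repair serves Jacksonville, FL. "
    "Call (904) 555-0199 for a same-day inspection."
)

mismatches = find_schema_mismatches(schema, visible)
print(mismatches)
```

A simple substring check like this will miss paraphrases, so treat an empty result as a first pass rather than proof of alignment.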

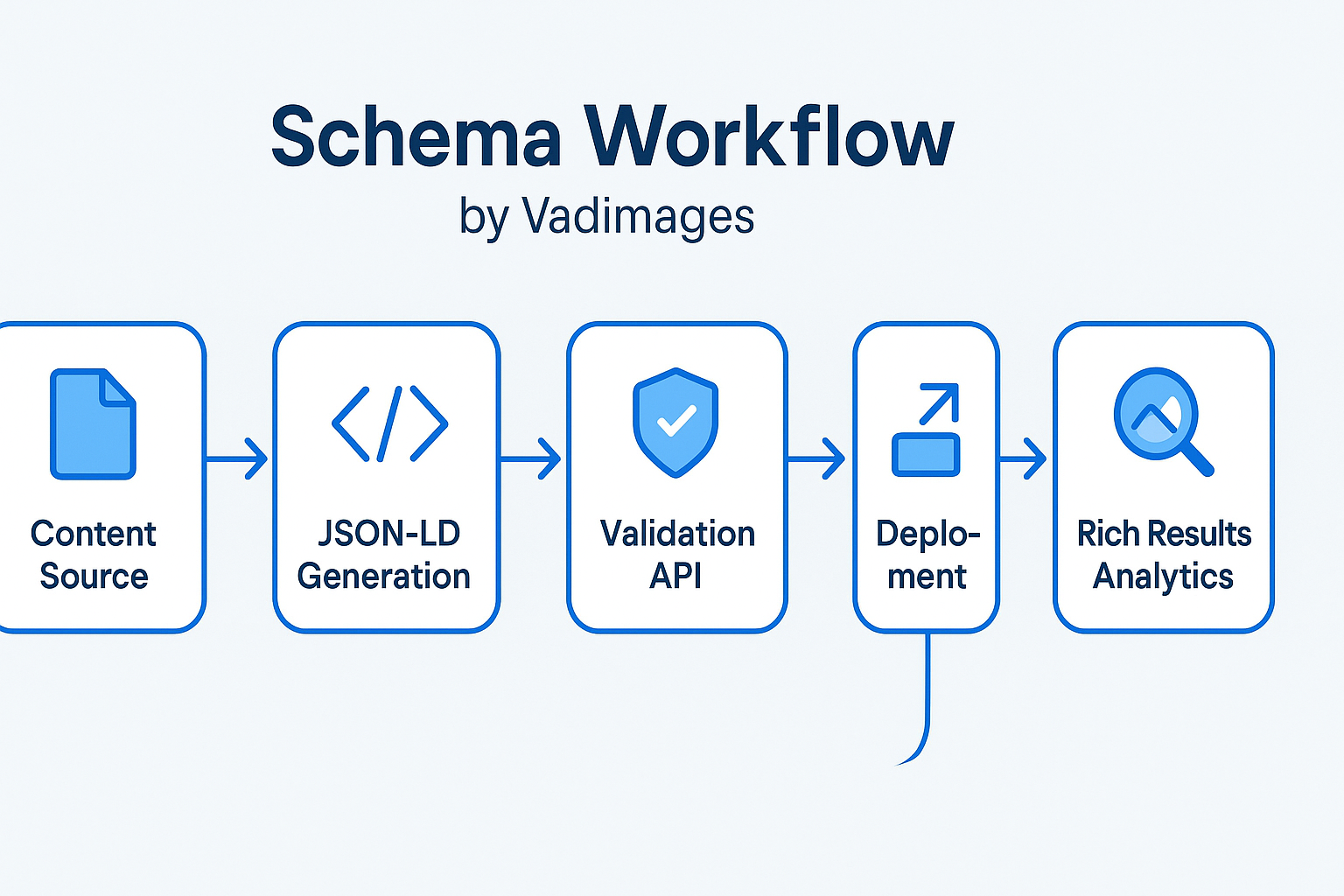

Codify your truth with schema, but keep it human-led. For most SMBs, a backbone that combines Organization or LocalBusiness, Service, and either FAQPage or QAPage is sufficient. The goal is to mirror the visible content and avoid over-promising. If your hours vary seasonally, represent it visibly and structurally. If you offer emergency service, put it in the opening paragraph, in a short “availability” note, and in openingHoursSpecification. If you publish testimonials, keep the Review markup honest and connected to real text on the page.

Here is a compact JSON-LD example that aligns a local service page, a service offering, and an FAQ block. The visible page should show the same facts in the same order so the model can triangulate.

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "LocalBusiness",
  "@id": "https://example.com/#roofing-jax",
  "name": "Atlantic Roof & Repair",
  "url": "https://example.com/roof-repair/jacksonville-fl",
  "image": "https://example.com/assets/roof-hero.jpg",
  "telephone": "+1-904-555-0199",
  "priceRange": "$$",
  "address": {
    "@type": "PostalAddress",
    "streetAddress": "512 Oak Ridge Ct",
    "addressLocality": "Jacksonville",
    "addressRegion": "FL",
    "postalCode": "32259",
    "addressCountry": "US"
  },
  "areaServed": [
    {"@type": "City", "name": "Jacksonville"},
    {"@type": "City", "name": "St. Johns"}
  ],
  "openingHoursSpecification": [
    {
      "@type": "OpeningHoursSpecification",
      "dayOfWeek": ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"],
      "opens": "07:00",
      "closes": "19:00"
    },
    {
      "@type": "OpeningHoursSpecification",
      "dayOfWeek": ["Saturday", "Sunday"],
      "opens": "08:00",
      "closes": "16:00"
    }
  ],
  "sameAs": [
    "https://www.facebook.com/atlanticroofjax",
    "https://www.google.com/maps?cid=1234567890"
  ],
  "makesOffer": {
    "@type": "Offer",
    "priceCurrency": "USD",
    "availability": "https://schema.org/InStock",
    "itemOffered": {
      "@type": "Service",
      "name": "Emergency Roof Leak Repair",
      "areaServed": "Jacksonville, FL",
      "providerMobility": "static",
      "serviceType": "Roof repair"
    }
  }
}
</script>

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What should I do first if my roof leaks after a storm?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Move electronics, place a bucket, and call a licensed roofer within 24 hours to reduce mold risk. Atlantic Roof & Repair offers same-day inspections in Jacksonville, FL."
      }
    },
    {
      "@type": "Question",
      "name": "How much does emergency roof repair cost in Jacksonville, FL?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Most emergency leak repairs range from $250 to $900 depending on the damage and roof type. You’ll receive a firm quote on site after the inspection."
      }
    }
  ]
}
</script>

For businesses that publish deep procedural content, consider QAPage markup when a single, primary question governs the page, supported by a definitive answer near the top. The content must reflect that structure. When the page is broader, rely on a strong H1 and H2 hierarchy and reserve FAQPage for direct, narrow questions that complement the main narrative rather than duplicate it.

Speed and stability are invisible ranking levers that matter more when summaries satisfy basic curiosity before the click. If a user decides to visit, the page must render instantly. Compress hero images, inline critical CSS, and lean on server-side rendering for the first screen. Use descriptive, short headings that match the AI’s language without stuffing. Keep tables and short paragraphs near the top so extractors can lift them faithfully. Where you present prices, explain ranges and link to a calculator or a booking flow that pre-fills the request with the details from the page. This subtle continuity tells both human and machine that your answer is not generic; it is operational.

Local trust signals remain decisive for US SMBs. Embed your city, neighborhood, and ZIP code in natural sentences and in your schema. Include a short “Why us in [City]” paragraph that mentions turnaround times and common local conditions. Publish a service radius map or a one-sentence note stating exact coverage. Surface review count and recency in text near your CTA while keeping Review markup compliant. Align the content of your Google Business Profile with the claims on the page so the model sees the same truth reflected across the ecosystem.

Finally, frame every answer with a next step. After a concise definition, invite a diagnostic. After a cost range, invite a personalized quote. After an eligibility explanation, invite a two-minute pre-check. Design these as short, visually consistent blocks that appear immediately after the paragraph the model is most likely to quote. If the AI Overview gives away your definition, your site still owns the path to the solution.

Here is a second snippet that pairs a service calculator with a HowTo section for extractable steps while keeping the action nearby.

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "HowTo",
  "name": "Stop a minor roof leak before the pro arrives",
  "estimatedCost": {
    "@type": "MonetaryAmount",
    "currency": "USD",
    "minValue": 0,
    "maxValue": 20
  },
  "step": [
    {
      "@type": "HowToStep",
      "name": "Protect the area",
      "text": "Move electronics and furniture; place a bucket under the drip."
    },
    {
      "@type": "HowToStep",
      "name": "Relieve ceiling water",
      "text": "If a bulge forms, carefully puncture at the lowest point to drain into the bucket."
    },
    {
      "@type": "HowToStep",
      "name": "Call a licensed roofer",
      "text": "Book a same-day inspection in Jacksonville, FL to prevent mold and structural damage."
    }
  ],
  "tool": [
    {"@type": "HowToTool", "name": "Bucket"},
    {"@type": "HowToTool", "name": "Tarp"}
  ]
}
</script>

The visible page should present these same steps in clear prose and, directly beneath them, a compact booking module. Keep forms minimal, show hours inline, and repeat the phone number in text rather than hiding it in an image. Trust badges, license numbers, and insurance statements should sit near the action for credibility that both humans and machines can parse.

Measuring Impact and Iterating Without Guesswork

You cannot optimize what you cannot see, so set up measurement that observes the two moments that matter most in the age of summaries. The first is whether your answers are being surfaced and read in the results; the second is whether your page, when visited, converts the motivated reader. While you will not always receive a label that says an AI Overview used your text, you can triangulate progress by tracking impressions and click-through rate on the queries where you provide definitional and diagnostic answers, watching for changes after you restructure pages and deploy schema. Segment performance by question-shaped keywords, by “near me” variations, and by city names to understand how local clarity influences behavior.
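The segmentation described above can be sketched in a few lines. This example assumes rows with "query", "clicks", and "impressions" fields, shaped loosely like a search performance export; the field names, the question-word list, the city list, and the sample numbers are all hypothetical.

```python
from collections import defaultdict

# Assumed word lists; adjust to your own queries and service area.
QUESTION_WORDS = ("what", "how", "why", "when", "where", "who", "which",
                  "can", "does", "do", "is", "should")
CITIES = ("jacksonville", "st. johns")


def classify_query(query):
    """Assign one segment per query: near_me, then question, then city."""
    q = query.lower().strip()
    words = q.split()
    if "near me" in q:
        return "near_me"
    if words and words[0] in QUESTION_WORDS:
        return "question"
    if any(city in q for city in CITIES):
        return "city"
    return "other"


def ctr_by_segment(rows):
    """Aggregate clicks and impressions per segment and return CTR per segment."""
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0})
    for row in rows:
        segment = classify_query(row["query"])
        totals[segment]["clicks"] += row["clicks"]
        totals[segment]["impressions"] += row["impressions"]
    return {
        segment: (t["clicks"] / t["impressions"] if t["impressions"] else 0.0)
        for segment, t in totals.items()
    }


# Made-up sample rows for illustration only.
rows = [
    {"query": "roof repair near me", "clicks": 30, "impressions": 600},
    {"query": "how much does roof repair cost", "clicks": 12, "impressions": 400},
    {"query": "roof leak repair jacksonville", "clicks": 20, "impressions": 250},
    {"query": "what to do if roof leaks", "clicks": 8, "impressions": 100},
]

segment_ctr = ctr_by_segment(rows)
for segment, ctr in sorted(segment_ctr.items()):
    print(f"{segment}: {ctr:.1%}")
```

Running the same aggregation before and after a page restructure gives a per-segment comparison rather than a single blended CTR.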

On the page, measure the scroll depth to the first CTA, the interaction rate with that CTA, and the time to first interaction on mobile. Connect phone taps to the query where possible and aggregate by intent class rather than obsessing over individual keywords. When you add a new FAQ cluster or adjust your opening paragraph to be more extractable, annotate the change and observe the downstream effects on both impressions and on-page actions over the following weeks. A small improvement in the conversion rate of visitors who arrive after seeing an answer in the results can outweigh a small decline in raw clicks if the visitors who do arrive are now more decisive.
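The trade-off between fewer clicks and a higher conversion rate is simple arithmetic, and it helps to compute the break-even point explicitly. The figures below are hypothetical, chosen only to illustrate the calculation.

```python
def conversions(clicks, conversion_rate):
    """Expected conversions for a given click volume and conversion rate."""
    return clicks * conversion_rate


def break_even_rate(old_clicks, old_rate, new_clicks):
    """Conversion rate needed at the new click volume to match old conversions."""
    return conversions(old_clicks, old_rate) / new_clicks


# Hypothetical scenario: clicks fall 10%, conversion rate rises from 4% to 5%.
before = conversions(1000, 0.04)
after = conversions(900, 0.05)
needed = break_even_rate(1000, 0.04, 900)

print(f"before: {before:.1f} conversions")
print(f"after:  {after:.1f} conversions")
print(f"break-even rate at 900 clicks: {needed:.2%}")
```

In this made-up scenario the page comes out ahead despite losing clicks, because the new rate clears the break-even rate of roughly 4.4%.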

Iterate at the section level rather than rewriting entire pages. Replace an abstract opener with a crisp, source-ready fact. Expand a thin FAQ answer into a two-sentence miniature that states the action and the condition that changes the action. Tighten a meandering heading into a label that mirrors how people ask the question. Unify your business hours and pricing claims across site, profile, and schema so the model no longer has to arbitrate between conflicting versions of your truth. The goal is not to chase an algorithmic trick; it is to become the reliable, local expert whose answers travel well from your page into summaries without losing fidelity.
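Conflicting hours are one of the easier inconsistencies to catch mechanically. The sketch below flattens an openingHoursSpecification list and compares it with hours copied by hand from a business profile; the `profile_hours` dict and its Saturday closing time are hypothetical, and no profile API is assumed.

```python
def hours_by_day(opening_hours_spec):
    """Flatten openingHoursSpecification into {day: (opens, closes)}."""
    hours = {}
    for spec in opening_hours_spec:
        days = spec["dayOfWeek"]
        if isinstance(days, str):  # dayOfWeek may be a single value or a list
            days = [days]
        for day in days:
            hours[day] = (spec["opens"], spec["closes"])
    return hours


def hours_conflicts(schema_hours, profile_hours):
    """Return {day: (schema_value, profile_value)} for days that disagree."""
    days = sorted(set(schema_hours) | set(profile_hours))
    return {
        day: (schema_hours.get(day), profile_hours.get(day))
        for day in days
        if schema_hours.get(day) != profile_hours.get(day)
    }


spec = [
    {"dayOfWeek": ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"],
     "opens": "07:00", "closes": "19:00"},
    {"dayOfWeek": ["Saturday", "Sunday"], "opens": "08:00", "closes": "16:00"},
]

# Hypothetical hours transcribed from the business profile.
profile_hours = {
    "Monday": ("07:00", "19:00"), "Tuesday": ("07:00", "19:00"),
    "Wednesday": ("07:00", "19:00"), "Thursday": ("07:00", "19:00"),
    "Friday": ("07:00", "19:00"),
    "Saturday": ("08:00", "15:00"),  # differs from the schema
    "Sunday": ("08:00", "16:00"),
}

conflicts = hours_conflicts(hours_by_day(spec), profile_hours)
print(conflicts)
```

The same comparison can be repeated for price ranges or phone numbers once each source is reduced to a plain dictionary.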

Work With Vadimages: An AI-Ready Content and Schema Sprint for SMBs

Vadimages helps US small and mid-sized businesses turn service pages into summary-ready, conversion-ready assets. We pair senior content strategists with technical SEOs and schema engineers in a focused two-week sprint. Day one maps your high-value queries to intents and identifies the pages most likely to be summarized. Day three restructures openings, headings, and FAQs into extractable, human-first patterns. Day five ships LocalBusiness, Service, and FAQPage or QAPage JSON-LD that mirrors the visible content. Day seven delivers performance fixes for the first screen, simplified forms, and mobile UI alignment. Day ten measures early impact and plans the next iteration. You receive a plain-English playbook you can reuse across locations and services, plus a checklist your internal team can run before publishing new pages.

If you are a home services brand in Florida, a dental practice in Texas, a boutique law firm in Illinois, or an after-school program in California, we adapt the same principles to your compliance and local realities. We know how to present eligibility, pricing ranges, and coverage areas in ways that reduce risk and increase clarity. We implement the schema that matches what your page truly says and we help operations maintain it. Most importantly, we make sure the paragraph that earns the quote in the results is followed immediately by the button that earns you the customer.

You can test us on one high-value page, then expand. We will refactor a single service or location page, deploy aligned schema, and set up the measurement you need to judge results with confidence. If you like what you see, we convert the playbook into a reusable template for your CMS and train your staff to keep shipping pages that win in AI Overviews without sacrificing conversions.

Reach out at Vadimages to start your AI-ready content and schema sprint. Tell us your three most valuable queries, your service territory, and your bottlenecks, and we will return a plan that respects your brand voice and your operational constraints. The AI era belongs to SMBs who make truth obvious, action immediate, and structure machine-readable. We would love to help you lead.